Benchmark Testing

Running longitudinal usability tests

At Zapier, we used A/B testing as we developed and iterated on features. The approach was useful, but it had a significant drawback: it led teams to evaluate individual features in isolation. It became easy to over-optimize local metrics while losing sight of the broader user experience, especially when multiple independent teams were changing the application at the same time.

Because we had no effective way to monitor the overall user experience, I proposed and ran benchmark usability tests to track it as a whole.

What's a benchmark test?

Benchmark usability testing is designed to monitor macro-level changes in a product's user experience. In practice, this means running the same usability test script, with the same questions and tasks, several times a year.

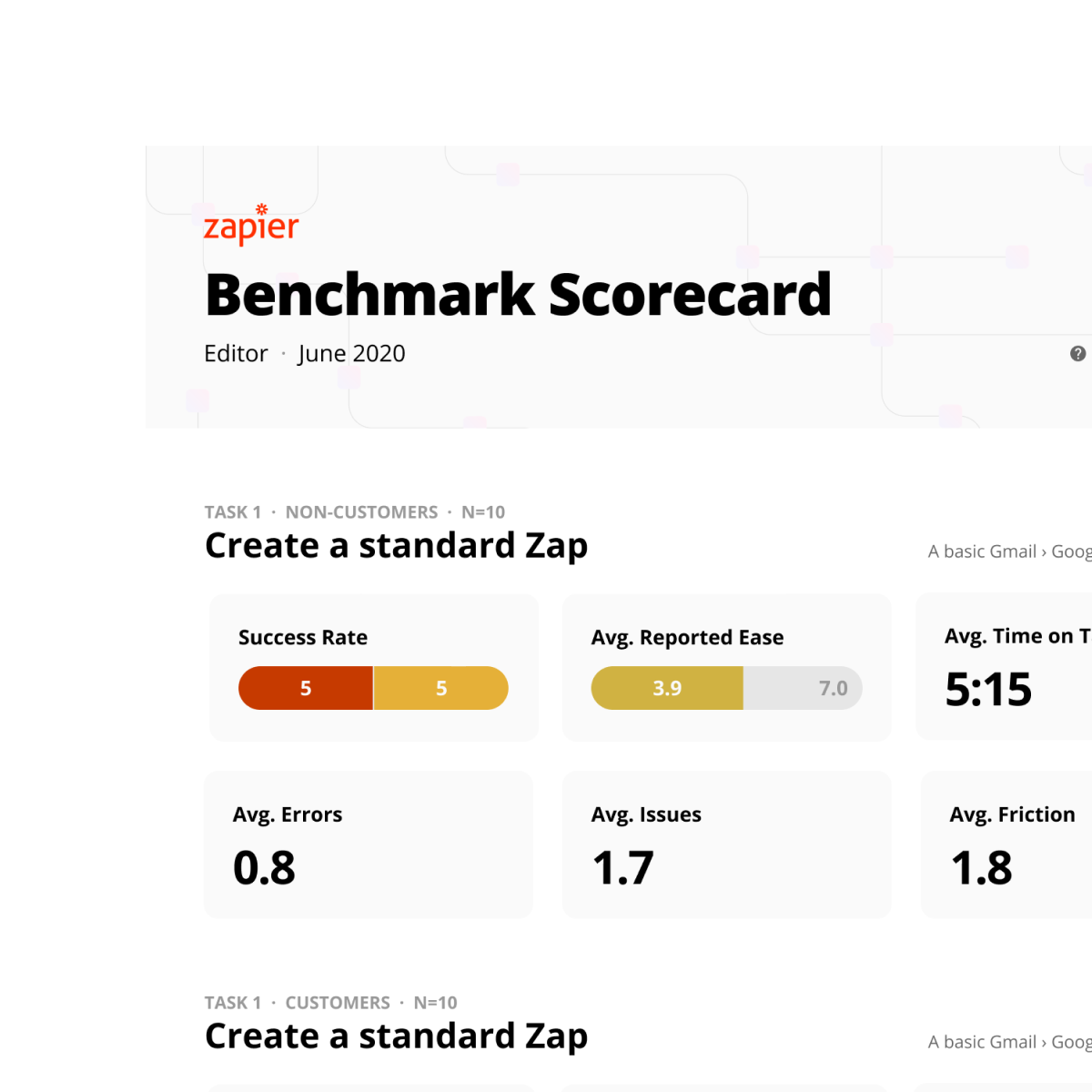

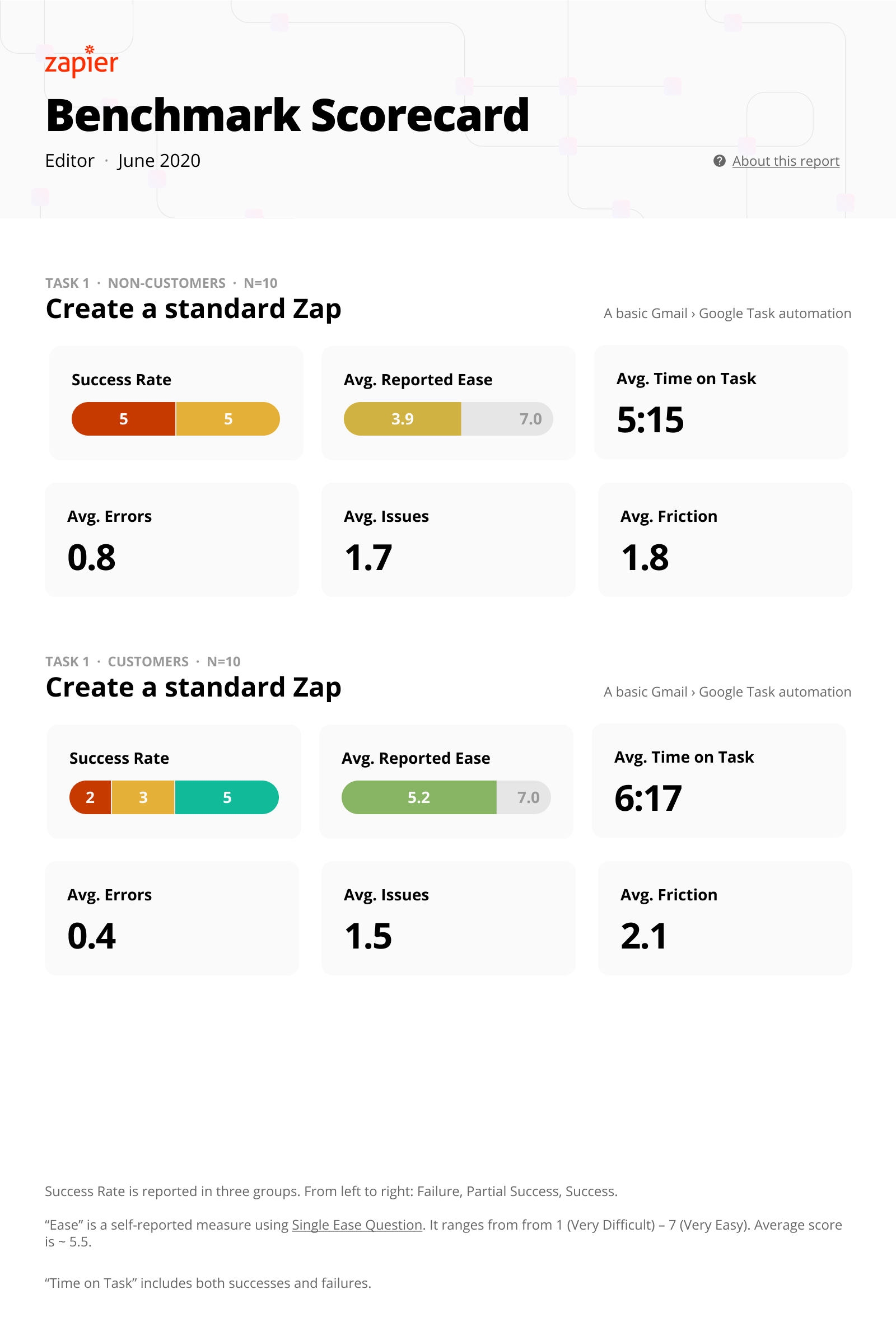

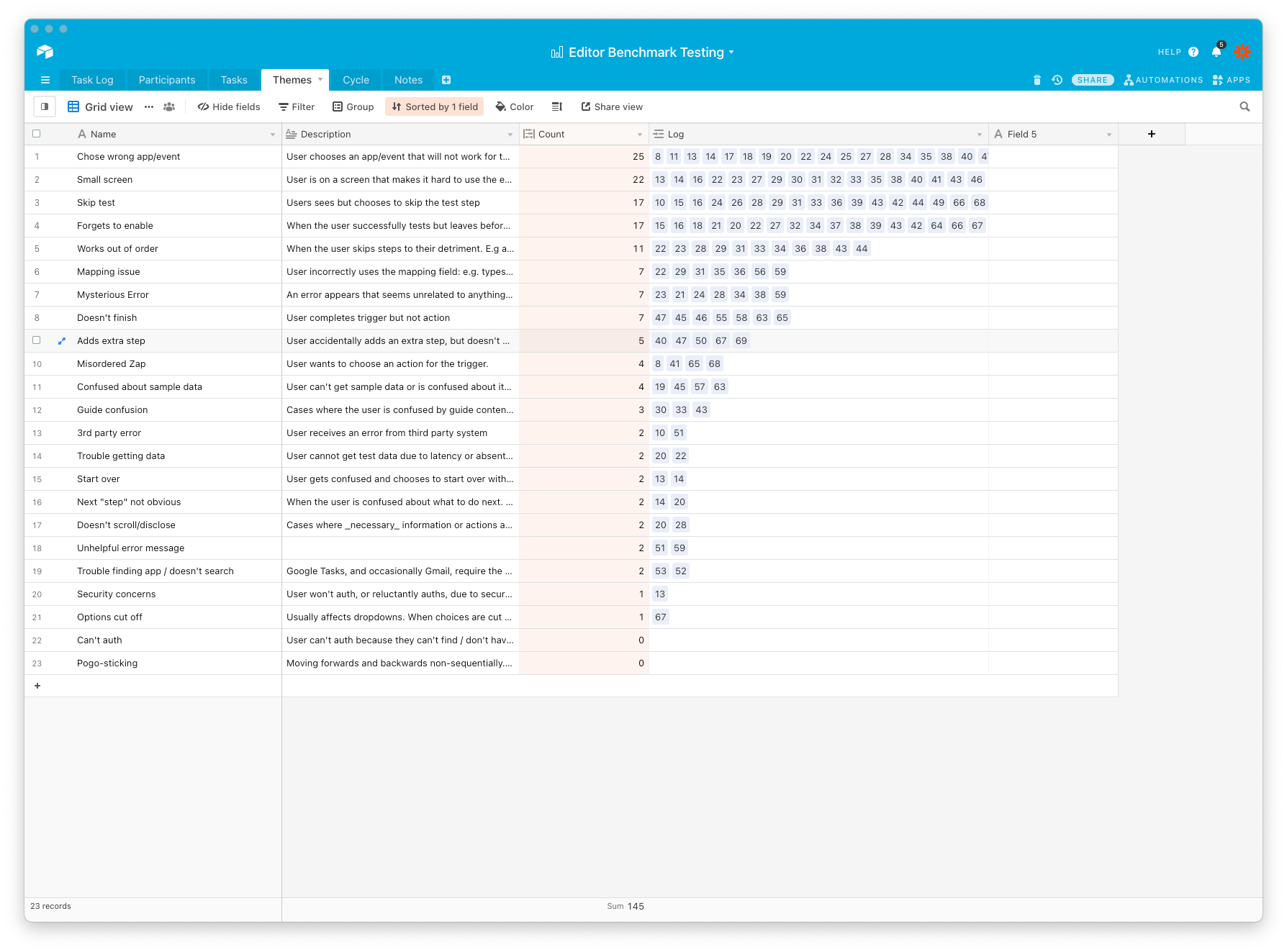

The outcome is a scorecard and a report that can be compared with previous studies to track the evolution of the user experience. I used this deliverable to highlight recently released work that seemed to impact the metrics.

How I conducted this study

Benchmark testing requires repeatability to compare results from one period to the next and observe changes. This involved:

- Automation: To minimize facilitator bias, I designed the test to be completely unmoderated, using UserTesting.com. This also had a practical benefit: moderating every session would have been time-consuming, and unmoderated sessions removed that constraint.

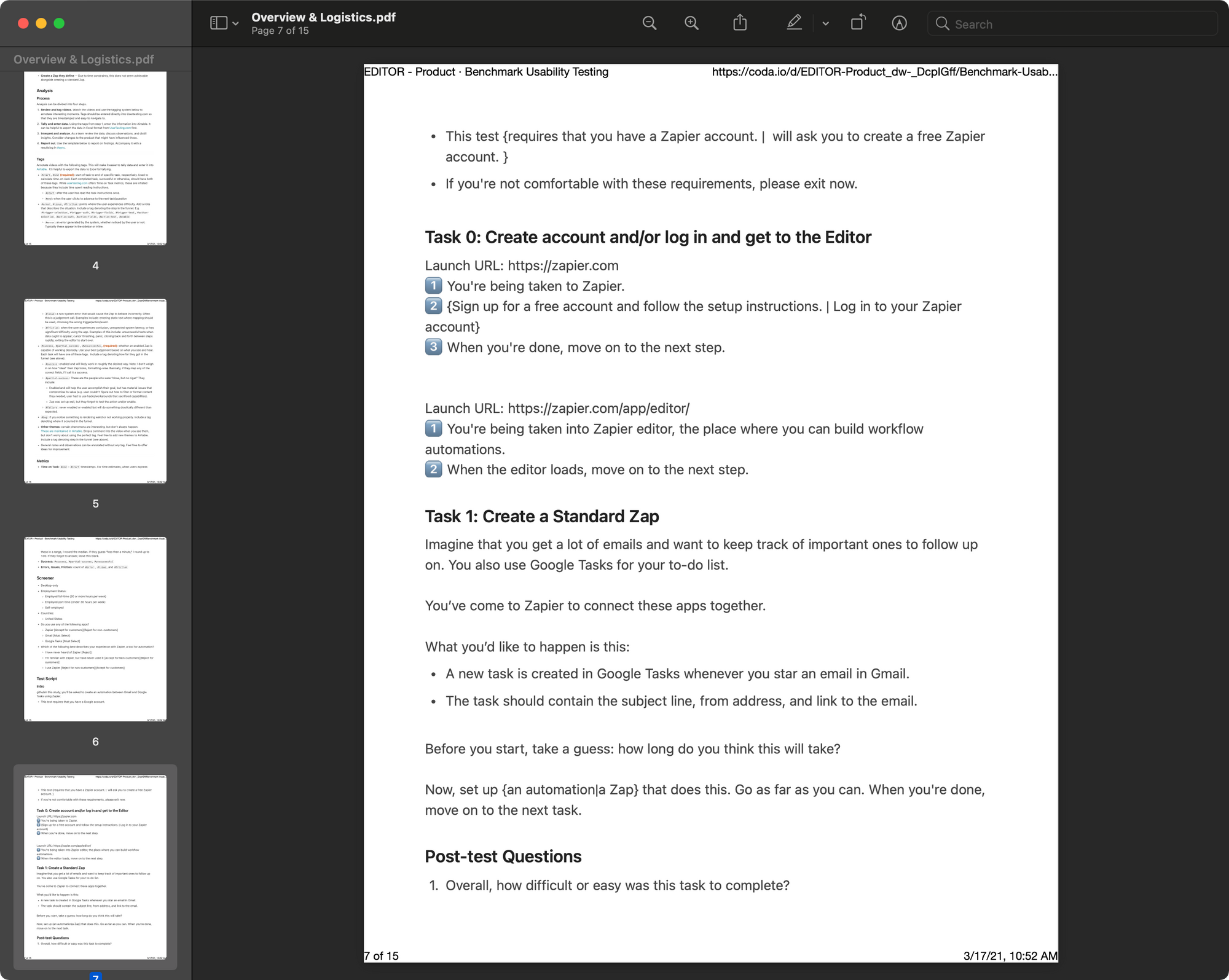

- Protocol: I developed a set of tasks that any new user could complete without prerequisites. For instance, participants did not need any special accounts or domain knowledge to complete them.

- Analysis: I created a rubric and data dictionary so that other researchers could analyze sessions using consistent criteria. This reduced the risk of different analysts interpreting the same behavior differently.

- Reporting: I developed tools that automatically generated the benchmark report metrics. These values could be easily inserted into a Figma template and shared quickly.
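To make the reporting step concrete, here is a minimal Python sketch of how scorecard metrics might be generated from coded sessions and compared with the previous round. It is not the actual tooling: the `SessionResult` schema, the two metrics (completion rate and mean time on task), and the function names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class SessionResult:
    """One participant's coded outcome for one task (hypothetical schema)."""
    participant: str
    task: str
    completed: bool
    seconds_on_task: float


def scorecard(results):
    """Aggregate coded sessions into per-task metrics for one benchmark round."""
    by_task = {}
    for r in results:
        by_task.setdefault(r.task, []).append(r)
    return {
        task: {
            # Share of participants who completed the task (0.0 to 1.0).
            "completion_rate": sum(r.completed for r in rows) / len(rows),
            # Average time spent on the task, in seconds.
            "mean_seconds": mean(r.seconds_on_task for r in rows),
        }
        for task, rows in by_task.items()
    }


def compare(previous, current):
    """Per-task metric change (current minus previous) for tasks in both rounds."""
    return {
        task: {m: current[task][m] - previous[task][m] for m in current[task]}
        for task in current
        if task in previous
    }
```

Calling `scorecard` on each round's results and passing both outputs to `compare` yields the per-task deltas that a report template could display. Because the script and tasks stay the same between rounds, a plain difference is enough to show the direction of change.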

Below are some artifacts from the process.

Overall

When multiple product teams operate in isolation, it's crucial to have mechanisms in place to monitor the overall experience. Benchmark testing provided a relatively automated method to stay informed about the user experience. It also complemented purely quantitative dashboards by providing more context about issues.